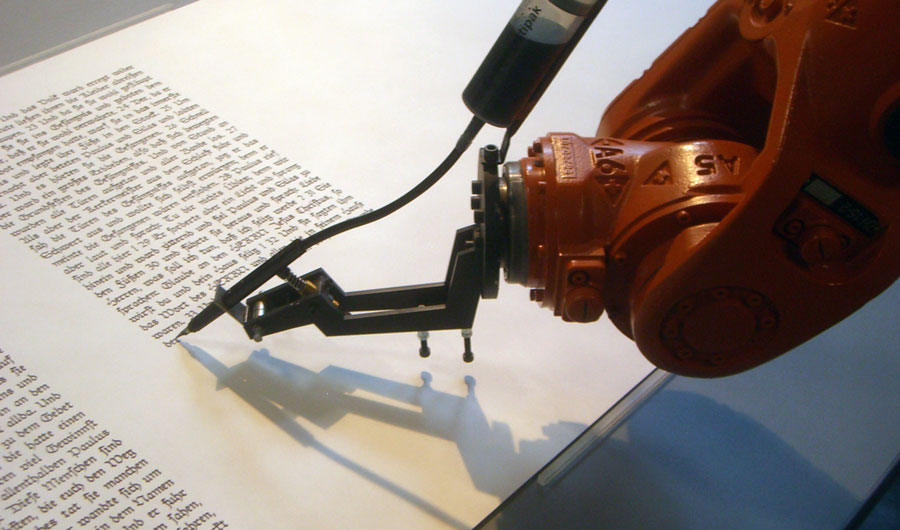

A Robot Wrote (Part of) This Article

A robot arm reproducing the calligraphic scripts of the Bible during an exhibition at ZKM Media Museum in Karlsruhe, Germany.

Image credits: Mirko Tobias Schäfer

Rights information: CC BY 2.0

(Inside Science) -- If your eyes have ever glazed over while reading scientific literature, a new system powered by artificial intelligence may be able to help. Researchers from MIT have used neural network-based techniques to summarize research papers filled with technical jargon. They published the results in the journal Transactions of the Association for Computational Linguistics.

Automating summaries is not new. You may have encountered Reddit’s AutoTLDR bot, or Auto Too-Long-Didn’t-Read bot, which automatically produces short summaries of long articles using a list of prescribed rules, such as the number of times a specific noun is mentioned. It is powered by an online engine called SMMRY and has been around since 2012.
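For the curious, here is a rough idea of what a rule-based summarizer of this kind can look like. This is a minimal sketch only, and it is not SMMRY's or AutoTLDR's actual code: it assumes a single rule (score each sentence by how often its words appear in the whole text), counts all non-trivial words rather than just nouns, and uses hypothetical names (`STOP_WORDS`, `split_sentences`, `summarize`) invented for illustration.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; real systems use much larger ones.
STOP_WORDS = {"the", "a", "an", "of", "to", "and", "in", "is", "it",
              "that", "on", "for", "was", "with", "as"}


def split_sentences(text):
    # Naive splitter: break wherever ., ! or ? is followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def summarize(text, max_sentences=1):
    sentences = split_sentences(text)

    # Count how often each non-stop word appears in the whole text.
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]
    freq = Counter(words)

    def score(sentence):
        # A sentence's score is the summed frequency of its non-stop words.
        tokens = re.findall(r"[a-z']+", sentence.lower())
        return sum(freq[t] for t in tokens if t not in STOP_WORDS)

    # Take the top-scoring sentences, then restore their original order.
    ranked = sorted(range(len(sentences)),
                    key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in chosen)
```

Calling `summarize(article_text, max_sentences=3)` would return the three sentences whose words recur most often, in their original order. A scheme like this tends to favor long sentences and repeated terms, which is part of why it can cope with news stories but struggles with dense technical writing.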

Simple algorithms may be good enough to summarize online news articles, but summarizing information-dense scientific literature is a more daunting task for a computer. According to the authors, even current neural network techniques have trouble correlating different bits of information spread throughout long papers, like someone struggling to keep track of all the characters in a Dostoevsky novel.

The researchers stumbled upon their approach while developing a memory system known as the Rotational Unit of Memory, or RUM, to solve physics problems such as modeling the behavior of light in different materials. They then realized that the same memory system could also help neural networks summarize information from scientific papers.

To test the competence of the new system, the researchers used it to summarize in a single sentence the very paper that describes RUM. Here is the result.

“Researchers have developed a new representation process on the rotational unit of RUM, a recurrent memory that can be used to solve a broad spectrum of the neural revolution in natural language processing.”

By comparison, here is how SMMRY summarizes the same paper.

While the system still can't match most humans' language abilities, it is a dramatic improvement over current programs, and it could help scientists or science writers sift through large numbers of papers for the ones that catch their interest.

And, in time, such automated natural language systems are likely to get even better. Perhaps it’s time for science journalists, including yours truly, to start looking for another job.